At the core of Bitcoin’s scaling debate has been the block size limit. For better or worse, most of the discussions about scaling in the Bitcoin community have focused on this one variable.

As an oversimplification of the current debate: Some would like to see an increase in block size which would enable more on-chain transactions per second; others would like to see the block size limit remain low in an effort to limit the cost of operating a full node while moving some types of payments above the base Bitcoin protocol to secondary layers such as the Lightning Network and sidechains.

In early 2016, a research paper was released that explored the relationship between increased on-chain capacity and the increased cost of operating a full node. The paper, titled On Scaling Decentralized Blockchains, explained that blocks must not exceed 4 megabytes if the goal is to prevent more than 10 percent of the full nodes on the network from being overwhelmed by the demands of the network. This 4 megabyte metric is often brought up during discussions around the proper block size limit for Bitcoin.

According to Christian Decker, an infrastructure tech engineer at Blockstream and one of the co-authors of the paper, Bitcoin network communication has improved over the past few months, which has affected the tradeoffs explained in the paper.

Bitcoin Magazine reached out to Decker to gather more information related to what the Bitcoin network can handle today in terms of block sizes.

Clearing Up Misconceptions Around the Study

Decker first wanted to clarify a common misconception of the research presented in the paper on scaling decentralized blockchains:

“Our results are not binary, in that they are not showing a threshold up to which nothing happens and going just beyond [which] bad things start to happen,” Decker explained. “What we do is show that there is a tradeoff between block size and the guarantees that confirmations give us.”

According to another paper co-authored by Decker, titled Information Propagation on the Bitcoin Network, increasing the block size also increases the blockchain fork rate, which means confirmations on the current tip of the blockchain become less reliable for users.
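The link between propagation delay and fork rate can be illustrated with a minimal sketch. It is not the model from either paper: it assumes blocks arrive as a Poisson process with Bitcoin's 600-second target interval and treats propagation as a single effective delay, during which a competing block may be found. The function name and the sample delays are hypothetical, chosen only for illustration.

```python
import math

BLOCK_INTERVAL_S = 600.0  # Bitcoin's target average time between blocks


def fork_probability(delay_s: float, interval_s: float = BLOCK_INTERVAL_S) -> float:
    """Chance that a competing block is found within `delay_s` seconds.

    Assumption: block discovery is a Poisson process, and the whole
    network learns of a new block after one effective delay.
    """
    return 1.0 - math.exp(-delay_s / interval_s)


# Hypothetical effective propagation delays, in seconds.
for delay in (2.0, 6.5, 20.0):
    print(f"{delay:>5.1f} s delay -> {fork_probability(delay):.2%} fork chance per block")
```

Under these assumptions, the fork chance grows with every extra second of delay, with no sharp cutoff. Larger blocks take longer to propagate, which is why they push the fork rate up.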

“What we can compute are upper bounds on block size, after which the system will definitely become unstable, e.g., never converge to a unique ledger state,” Decker continued. “However the tradeoff between block size, confirmation guarantees, and centralization pressure is continuous: Even small changes have an effect. We show that the tradeoff exists and that it must be taken into consideration, but the decision about what the sweet spot in this tradeoff is, is a political issue and less a technical one. If network participants are happy to have a less decentralized network in exchange for an incremental transaction rate increase, that's their decision.”

While Decker said he is convinced that a moderate block size increase is possible without incurring too many negative effects, he also noted that the precedent set by such a move needs to be taken into consideration.

“There are natural limits to the size that the network can support, namely our worst case thresholds, beyond which we cannot go,” Decker stated. “An increase today could signal that in case of block space contention we can always just increase the block size, which is definitely not true.”

Improvements to the Bitcoin Network

According to Decker, the fundamental analysis provided by On Scaling Decentralized Blockchains is still relevant, but there have been incremental improvements achieved over the past few months that have had a noticeable effect on the tradeoffs described in the paper. Decker pointed to Bitcoin Core contributor Matt Corallo’s work on the FIBRE relay network and Compact Block Relay as specific contributors to network communication improvements.

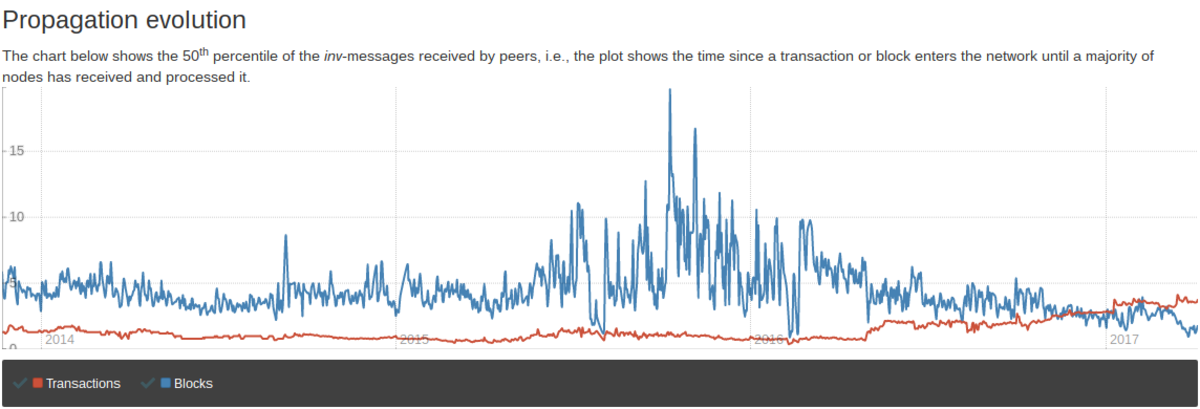

Decker also runs BitcoinStats.com, which tracks the efficiency of information propagation around the Bitcoin network. He recently updated the site with new data that, in his view, shows blocks now propagate around the network much faster than they did before Corallo’s improvements were implemented.

According to Decker, a comparison of block propagation times between now and one year ago shows that a 3 megabyte block today propagates about as quickly as a 1 megabyte block did one year ago. It took a 1 megabyte block 6.5 seconds to reach half of the network a year ago, while it takes roughly 2 seconds today. However, Decker added that block size increases have lengthened long-tail propagation time, which means it now takes longer for a block to reach every single node on the network.
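The arithmetic behind the comparison can be checked with a short sketch. The two timings come from the figures quoted above. The linear scaling of propagation time with block size is a simplifying assumption made here for illustration, not a claim from Decker.

```python
# Time for a 1 MB block to reach half of the network (figures from the article).
OLD_HALF_NETWORK_S = 6.5  # one year earlier
NEW_HALF_NETWORK_S = 2.0  # today (approximate)

speedup = OLD_HALF_NETWORK_S / NEW_HALF_NETWORK_S

# Assumption: propagation time grows linearly with block size, so a block
# `speedup` times larger would take as long today as 1 MB did a year ago.
equivalent_size_mb = 1.0 * speedup

print(f"Speedup: {speedup:.2f}x")
print(f"Block size with year-ago 1 MB propagation time: {equivalent_size_mb:.2f} MB")
```

The result is consistent with the roughly threefold improvement Decker describes. It covers only the time to reach half of the network and says nothing about the long tail.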

“Both [FIBRE] and [Compact Block Relay] employ forward error correction to reduce the amount of data to be shipped around the world and recombine blocks from multiple sources instead of relying on a single source,” Decker explained. “This creates geographically distributed seeds from which the blocks are then forwarded to the remaining peers.”

In the past, Corallo and BTC.com’s Kevin Pan have told Bitcoin Magazine that FIBRE and Compact Block Relay have also led to a decline in the number of empty blocks mined on the network.

What Can Bitcoin Handle Today?

At this time, further analysis on what impact the implemented network improvements have had on Bitcoin’s ability to handle blocks larger than 4 megabytes has not been made available. “I am continually monitoring the network propagation, and need to aggregate them and re-evaluate our analysis,” Decker said.

“What is safe strongly depends on the requirements of the user, the tradeoff is still valid, it just shifted slightly due to the increased efficiency,” Decker added.

According to Decker, extrapolating a new throughput limit from the improvements in how efficiently data is shipped around the world may be simplistic. In other words, a threefold improvement in network communication does not necessarily mean the 4 megabyte figure in the original paper would be 12 megabytes if it were written today.

“These one-time efficiency increases have certainly moved the throughput limit at which bad things definitely happen upwards, but whether it is at 12 megabytes, I'm not sure,” explained Decker. “As I mentioned, it's not a binary threshold, just an upper limit, and putting a number on it just does not mean that lower values are safe.”

In conclusion, Decker added that there are some types of transactions that will never make sense as on-chain transactions due to the intrinsic costs involved. “There will always be use-cases which are not fit for on-chain payments,” he stated. “There is also a privacy increase in using off-chain transfers, since they no longer leave a permanent trace in the blockchain.”

In Decker’s view, off-chain protocols, such as the Lightning Network or Duplex Micropayment Channels, are desirable for long-term scalability because they evolve much faster and do not need consensus from the entire ecosystem to be deployed.

“I think Segregated Witness is both a malleability fix, enabling these higher level protocols, and a safe block size increase, which does not set the dangerous precedent of just bumping the block size [limit] when there is block size contention,” Decker stated.