Finance Redefined is Cointelegraph's DeFi-centric newsletter, delivered to subscribers every Wednesday.

The Alpha Homora and Cream Finance hack has left a gigantic mark on the DeFi space this week.

It is the largest single hack in DeFi history at $37 million in funds stolen. It is also one of the most complex, apparently leveraging several honest-to-God vulnerabilities in Alpha Homora. A few missing input checks in very specialized conditions allowed the hacker to abuse Alpha Homora’s privilege of borrowing an unlimited amount of funds from Cream Finance’s Iron Bank. Flash loans were of course involved, but unlike some previous hacks like Harvest Finance, this doesn’t seem to have been a purely economic exploit.
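To make the idea of a "missing input check" concrete, here is a deliberately simplified toy model in Python. It is not the Alpha Homora or Iron Bank contract code, and every name in it (`ToyLender`, `ToyLeverageProtocol`, `borrow_for`, `MAX_LEVERAGE`, the strategy labels) is hypothetical. It only sketches the general pattern described above: a lender that places no credit limit on a trusted protocol is only as safe as the checks that protocol runs on its own callers.

```python
MAX_LEVERAGE = 2  # hypothetical cap on borrowing relative to collateral


class ToyLender:
    """Hypothetical lender that gives one trusted protocol uncapped credit."""

    def __init__(self, liquidity):
        self.liquidity = liquidity

    def lend_to_trusted(self, amount):
        # No credit limit for the trusted borrower; only liquidity bounds it.
        if amount > self.liquidity:
            raise ValueError("not enough liquidity")
        self.liquidity -= amount
        return amount


class ToyLeverageProtocol:
    """Hypothetical trusted protocol that borrows on behalf of its users."""

    def __init__(self, lender, approved_strategies, validate_inputs=True):
        self.lender = lender
        self.approved_strategies = set(approved_strategies)
        self.validate_inputs = validate_inputs

    def borrow_for(self, strategy, collateral, amount):
        if self.validate_inputs:
            # These are the kinds of checks whose absence becomes exploitable.
            if strategy not in self.approved_strategies:
                raise PermissionError("unknown strategy")
            if amount > collateral * MAX_LEVERAGE:
                raise PermissionError("not enough collateral")
        return self.lender.lend_to_trusted(amount)


def demo():
    """Run the same malicious request with and without input validation."""
    results = {}
    for validate in (True, False):
        lender = ToyLender(liquidity=1_000_000)
        protocol = ToyLeverageProtocol(
            lender, {"approved-farm"}, validate_inputs=validate
        )
        try:
            taken = protocol.borrow_for(
                "attacker-strategy", collateral=0, amount=1_000_000
            )
        except PermissionError:
            taken = 0
        results[validate] = (taken, lender.liquidity)
    return results


if __name__ == "__main__":
    print(demo())
```

With validation on, the request is rejected and the lender keeps its funds. With validation off, the same call empties the toy pool. The real exploit was far more involved than this, but the trust relationship at its core has this shape.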

News of the hack had a very negative impact on prices for all the protocols involved, including Aave for some reason. Looking more generally at the DeFi Perp on FTX, there is a clear peak right on Feb. 13, when the hack happened.

Perhaps some of that is just normal market action, but overall it’s looking as if the hack single-handedly put an end to the DeFi season, for now.

Auditors feeling the heat

Like any protocol reaching any kind of mass adoption today, Alpha Homora was audited by Quantstamp and PeckShield, both of them skilled and respectable firms.

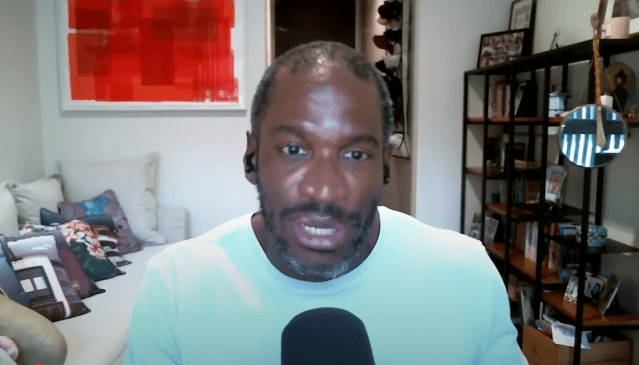

Yet, the details of the hack led some to suspect it was an inside job, potentially by someone at these auditing firms. Yearn.finance core developer Banteg mentioned how the details of the hack were so obscure that it was extremely unlikely anyone figured it out just by looking at the contracts. Notably, the pool attacked by the hacker was unannounced and unused, which is what allowed the hack to occur in the first place.

While there were no public accusations, the incident triggered yet another discussion of why auditors failed to catch the bug, whether they are properly incentivized, and how this situation can be mitigated.

The anatomy of a complex hack

As a former bug bounty hunter, I really do believe that the auditing ecosystem is about as “incentive-aligned” as it can be. Auditing companies risk their reputation every time a major bug like this slips through their nets. Enough of these in quick succession and nobody will trust that business anymore. Auditors have all the motivation to find everything they can. It’s just that sometimes they realistically cannot do so.

An audit is a limited-time contract during which a team of experienced security engineers combs through the code in search of anything that looks suspicious. Keywords here are “limited-time” and “in search of anything.”

I can say from personal experience that a bug like the one we just saw is not something you can casually find by looking at the code. Finding a multi-step, complex bug like this is an iterative process. It starts with you stumbling on that one weird thing that’s not acting as it should, for example, a website forgetting to check if you’re actually logged in when performing a certain task. You take that nugget and ask yourself, “How can I exploit this?” You come up with ideas, scour the platform for other weak points and see if you can combine them somehow. Most of the time you don’t actually find anything and that weak point remains unexploitable.

But with days of focused work, multiple trials and errors, sometimes you do figure out how to exploit the initial issue. When it happens, it’s always a combination of factors that alone seem irrelevant, but taken together they fit into a nasty puzzle.

The focus and dedication required to find most of the bugs that resulted in major hacks go beyond the scope of an audit. If auditors were to chase every single lead with the time they had, they would quite literally waste so much of it that they’d fail to find the easily exploitable and obvious things. That is not to say that auditors never find complex bugs, but it’s unreasonable to expect them to find everything. And if an auditor really did find the Alpha Homora bug and withheld it, there are deeper issues at play than economic incentives.

How to secure DeFi

The issues with audits mean that projects should launch bug bounties to find really complex bugs. Bounties have no time limits, they put many more eyes on the platform, and the pay is results-based. That is much more efficient than paying auditors for more work hours in the hope they’ll find something.

Most understand the power of bug bounties by now, although of course Alpha Homora did not have one. But projects like Yearn.finance do, and they got hacked all the same.

Sometimes these things just happen. Crypto carries a problematic combo: actually exploiting a bug for money and getting away with it is really easy, while the infrastructure is unlike anything else hackers have seen before. To begin hunting for bounties in DeFi, you have to be a serious crypto expert and an experienced Solidity/Vyper programmer, both things that don’t just come immediately. For a white hat hacker, there are plenty of standard Web2 platforms offering very competitive bounties. Why should they bother researching DeFi?

People misunderstand the challenge of securing protocols. Alpha Homora said that any bounty they could have paid would’ve paled in comparison to the loot at stake. But the goal shouldn’t be to pay hackers what they could steal. That’s a losing proposition. The goal is to attract good-hearted white hat hackers to analyze the project and get paid a legal bounty: one that is less than the millions they could get by exploiting the bug, but that can still be a life-changing payout. Maybe something like $50,000 or $200,000, depending on the situation? That’s likely less than the cost of one audit by a highly regarded firm.

In other news

- 1inch launches yet another “vampire attack” on Uniswap by airdropping free tokens to (some of) its users.

- A startup launches a DeFi-enabled yield app.

- Grayscale could be looking to establish a YFI trust. To be fair, many others like SushiSwap or Chainlink are candidates too.

- Prominent projects back GoodFi, a DeFi education alliance.

- HiFi launches a fixed-rate interest lending protocol.