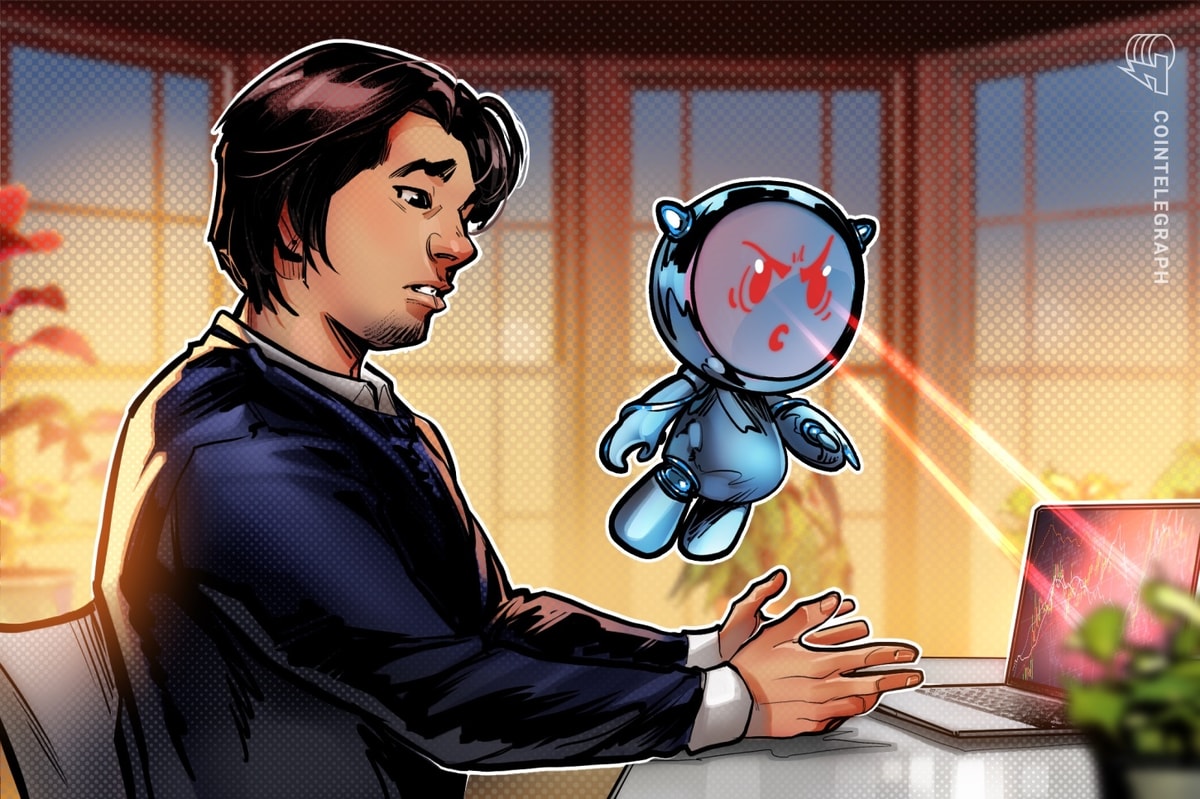

United States Democrat Shamaine Daniels has enlisted an artificial intelligence (AI) campaign volunteer in her bid for Congress in the 2024 elections.

According to a report from Reuters, Daniels is using “Ashley,” which she says is the first political phone banker powered by generative AI. The technology comes from the developer Civox. Robocallers have existed for some time, but unlike them, none of Ashley’s responses are pre-recorded.

The report says Ashley is built with technology “similar” to that of OpenAI’s ChatGPT, which allows the bot to hold an infinite number of customized, one-on-one conversations with voters in real time.

Already, the AI caller bot has reached thousands of voters in Pennsylvania as one of Daniels’ campaign volunteers.

Civox CEO Ilya Mouzykantskii predicted that the use of AI callers will “scale fast” and said the company intends to make tens of thousands of calls a day by the end of the year.

“This is coming for the 2024 election, and it’s coming in a very big way... The future is now.”

Ashley was given a robotic-sounding voice and discloses that she is an AI bot when speaking with voters, although no U.S. law requires her to do so.

Civox has not disclosed exactly which generative AI models it uses, though it said it relies on more than 20, both open-source and proprietary. The bot was trained using data available on the internet.


Ahead of the 2024 elections in the U.S., the use of AI has become a contested topic. In October, U.S. senators proposed a bill that would punish creators of unauthorized AI-generated deepfakes.

Before that, companies developing AI tools had also implemented safeguards to stop the spread of fake or misleading information. Google has made disclosing AI usage in political campaign advertisements mandatory.

Meanwhile, Meta, the parent company of Instagram and Facebook, barred political advertisers from using its generative AI ad-creation tools in early November.

A recent study from the Microsoft Threat Analysis Center, a Microsoft research unit, found that AI usage on social media has great potential to influence voter sentiment.

Another study out of Europe tested Microsoft’s Bing AI chatbot, now rebranded as Copilot, and found that it gave incorrect answers about election information 30% of the time.
