We have all seen photos of large data centers hosting mining hardware built from specialized ASICs designed to solve the Bitcoin proof-of-work (a double SHA256 hash.) These data centers tend to be located in places with inexpensive electricity, often where hydroelectricity is plentiful, like Washington State in the U.S. But how much electricity is consumed by these miners? Knowing this helps us to better understand the economics and financial opportunities of Bitcoin mining. Previous estimates have not been very accurate, often making simplistic assumptions. So I decided to conduct the most exhaustive research on this topic that I could. All sources used in this research are listed in my original blog post.

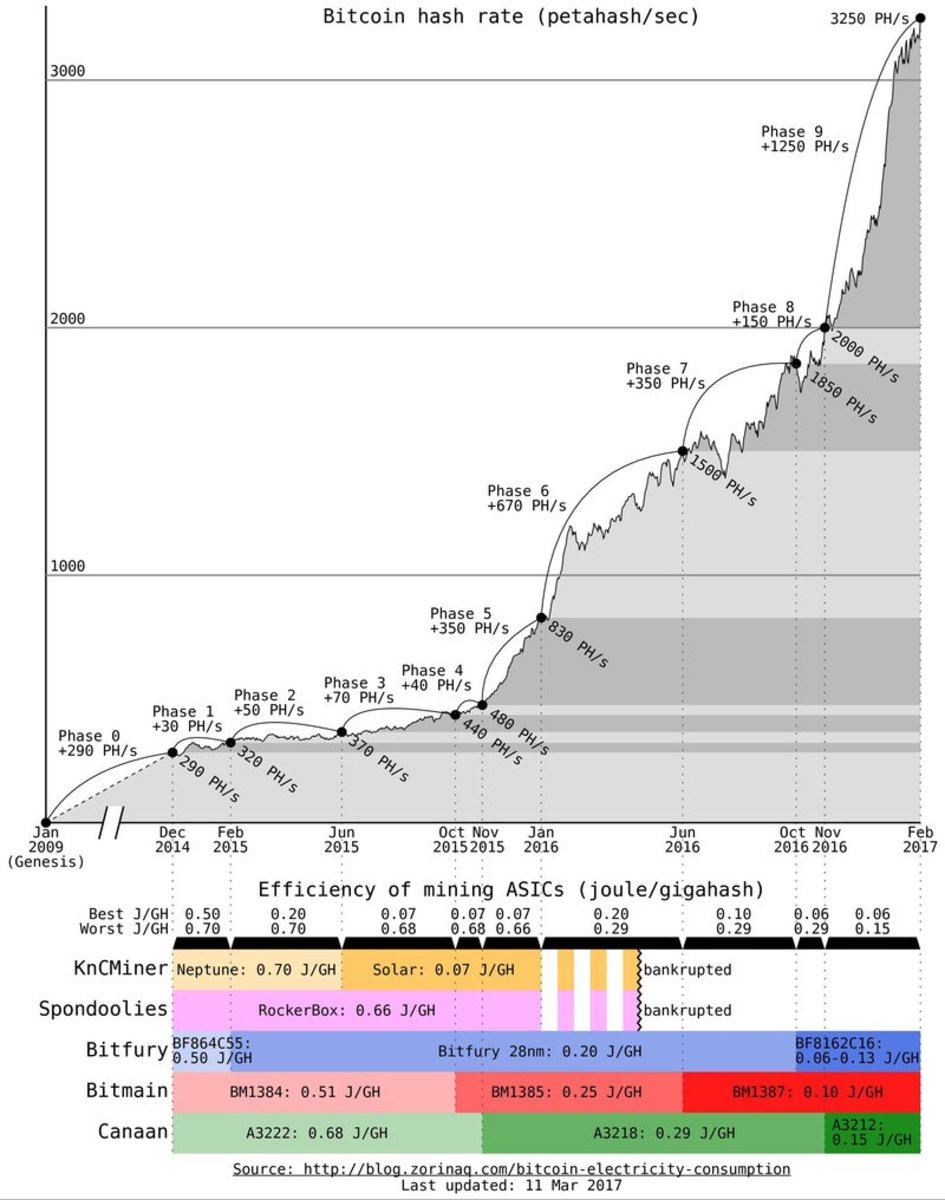

I started by drawing a chart juxtaposing the Bitcoin hash rate with the market availability of mining ASICs and their energy efficiency. This makes it possible to calculate with certainty the lower and upper bounds for the global electricity consumption of miners (which is not wasteful in my opinion.)

I split the timeline into 10 phases representing the releases and discontinuations of mining ASICs.

I reached out to some Bitcoin ASIC manufacturers when doing this market research. Canaan was very open and transparent (thank you!) and gave me one additional extremely useful data point: They manufactured a total of 191 PH/s of A3218 ASICs.

Determining the upper bound for the electricity consumption is then easily done by making two worst-case assumptions. Firstly we assume that 100% of the mining power added during each phase came from the least efficient hardware available at that time that is still mining profitably. Secondly we assume none of this mining power, some of it being barely profitable, was ever upgraded to more efficient hardware.

Hardware that is no longer profitable has obviously been retired. As of February 26, 2017 (difficulty = 441e9, 1 BTC = 1180 USD, and assuming $0.05/kWh — half the worldwide average electricity cost) an ASIC is profitable if its efficiency is better than 0.56 J/GH:

1e9*3600 (hashes per hour of 1 GH/s) / (2^32 * 441e9 (difficulty)) * 12.5 (BTC reward per block) * 1180 (USD per BTC) / 0.05 ($/kWh) * 1000 (Wh/kWh) = 0.56 J/GH
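The same break-even calculation can be written as a short Python sketch. The function and variable names are my own and purely illustrative; the inputs are the figures quoted above (difficulty, block reward, BTC price, and the assumed $0.05/kWh).

```python
# Break-even efficiency (J/GH) above which a miner's electricity bill
# exceeds its mining revenue. All inputs are the figures quoted in the text.

def break_even_j_per_gh(difficulty, reward_btc, btc_usd, usd_per_kwh):
    hashes_per_hour = 1e9 * 3600                      # one hour at 1 GH/s
    blocks_per_hour = hashes_per_hour / (2**32 * difficulty)
    usd_per_hour = blocks_per_hour * reward_btc * btc_usd
    kwh_per_hour = usd_per_hour / usd_per_kwh         # energy that revenue can buy
    # kWh per hour * 1000 = Wh per hour = W; W per GH/s is the same as J/GH
    return kwh_per_hour * 1000

threshold = break_even_j_per_gh(441e9, 12.5, 1180, 0.05)
print(f"break-even efficiency: {threshold:.2f} J/GH")
print("BM1384 (0.51 J/GH) still profitable:", 0.51 < threshold)
```

Re-running the function with a different electricity price or exchange rate shows how sensitive the threshold is to those two assumptions.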

So 3 ASICs in the chart are no longer profitable: Neptune, RockerBox, and A3222.

Also, most of the hardware deployed during phase 0 (CPUs, GPUs, FPGAs, first-generation ASICs) has not been profitable for a long time, so we assume that the miners who deployed during this phase have since upgraded to the least efficient ASIC available at the end of phase 0 that is still profitable: BM1384.

Furthermore, despite A3218 being the least efficient in phases 5-8 we can only assume 191 PH/s of it were deployed, and the rest of the hash rate came from the second least efficient ASIC: BM1385 (phase 6), Bitfury 28nm (phase 7), or BF8162C16 (phase 8).

To summarize all this, the upper bound estimate leads to the following breakdown of hardware deployments:

- Phase 0: 290 PH/s @ 0.51 J/GH (BM1384)

- Phases 1-3: 150 PH/s @ 0.51 J/GH (BM1384)

- Phase 4: 40 PH/s @ 0.25 J/GH (BM1385)

- Phase 5: 191 PH/s @ 0.29 J/GH (A3218) + 159 PH/s @ 0.25 J/GH (BM1385)

- Phase 6: 670 PH/s @ 0.25 J/GH (BM1385)

- Phase 7: 350 PH/s @ 0.20 J/GH (Bitfury 28nm)

- Phase 8: 150 PH/s @ 0.13 J/GH (BF8162C16)

- Phase 9: 1250 PH/s @ 0.15 J/GH (A3212)

- Average weighted by PH/s: 0.238 J/GH

Therefore the upper bound electricity consumption of the network at 3250 PH/s, assuming the worst-case scenario of miners deploying the least efficient hardware of their time (0.238 J/GH on average), is 774 MW or 6.78 TWh/year.
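The weighted average and the resulting upper bound can be checked with a few lines of Python. This is only a sketch: the `deployments` list is my own transcription of the breakdown above, and the variable names are illustrative.

```python
# (label, hash rate in PH/s, efficiency in J/GH), copied from the breakdown above
deployments = [
    ("Phase 0, BM1384",       290, 0.51),
    ("Phases 1-3, BM1384",    150, 0.51),
    ("Phase 4, BM1385",        40, 0.25),
    ("Phase 5, A3218",        191, 0.29),
    ("Phase 5, BM1385",       159, 0.25),
    ("Phase 6, BM1385",       670, 0.25),
    ("Phase 7, Bitfury 28nm", 350, 0.20),
    ("Phase 8, BF8162C16",    150, 0.13),
    ("Phase 9, A3212",       1250, 0.15),
]

total_phs = sum(phs for _, phs, _ in deployments)

# 1 PH/s = 1e6 GH/s, so PH/s * J/GH gives the power draw directly in MW
power_mw = sum(phs * eff for _, phs, eff in deployments)

avg_j_per_gh = power_mw / total_phs
twh_per_year = power_mw * 8760 / 1e6   # MW * hours per year = MWh, then MWh -> TWh

print(f"total hash rate:  {total_phs} PH/s")
print(f"weighted average: {avg_j_per_gh:.3f} J/GH")
print(f"upper bound:      {power_mw:.0f} MW, {twh_per_year:.2f} TWh/year")
```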

Now, what about a lower bound estimate? We start with a few observations about the latest 4 most efficient ASICs:

- Bitfury BF8162C16’s efficiency can be as low as 0.06 J/GH. But the clock and voltage configuration can be set to favor speed over energy efficiency. All known third party BF8162C16-based miner designs favor speed at 0.13 J/GH (1, 2). Bitfury’s own private data centers also favor speed with their immersion cooling technology (1, 2, 3). The company once advertised the BlockBox container achieved 0.13 J/GH (2 MW for 16 PH/s), presumably close to the efficiency achieved by their data centers. But we want to calculate a lower bound, so let’s assume the average BF8162C16 deployed in the wild operates at 0.10 J/GH.

- KnCMiner Solar is exclusively deployed in their private data centers and achieves an efficiency of 0.07 J/GH.

- Bitmain BM1387’s efficiency is 0.10 J/GH.

- Canaan A3212’s efficiency is 0.15 J/GH.

As to market share, we know KnCMiner declared bankruptcy and was later acquired by GoGreenLight. They currently account for 0.3% of the global hash rate… a rounding error we can ignore.

Therefore the lower bound electricity consumption of the network at 3250 PH/s assuming the best-case scenario of 100% of miners currently running one of the latest 3 most efficient ASICs (at best 0.10 J/GH) is 325 MW or 2.85 TWh/year.

Can we do better than merely calculating lower and upper bounds? I think so, but with the exception of Canaan, mining hardware manufacturers tend to be secretive about their market share, so everything below is just an educated guess.

Virtually all of the 1750 PH/s added after June 2016 came from BF8162C16, BM1387, and A3212, with the latter having the smallest market share. So the average efficiency of this added hash rate is likely around 0.11-0.13 J/GH. This represents 190-230 MW.

I would further venture that out of the 1500 PH/s existing as of June 2016, perhaps half was upgraded to BF8162C16/BM1387/A3212, while the other half remains a mixture of BM1385 and A3218. This represents 750 PH/s at 0.11-0.13 J/GH, and 750 PH/s at 0.26-0.28 J/GH, or a total of 280-310 MW.

I believe an insignificant proportion of the hash rate (less than 5%?) comes from all other generations of ASICs. Bitfury BF864C55 and 28nm deployments were upgraded to BF8162C16. KnCMiner/GoGreenLight represents 0.3%. BM1384 is close to being unprofitable. RockerBox, A3222, Neptune have long been unprofitable.

Therefore my best educated guess for the electricity consumption of the network at 3250 PH/s adds up to 470-540 MW or 4.12-4.73 TWh/year.
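The arithmetic behind this best guess can be sketched in Python as follows. The helper `power_mw` and the variable names are illustrative, and the inputs are the hash rates and efficiency ranges guessed above. Note that the article rounds each intermediate result to the nearest 10 MW before adding, which is why its upper figure is 540 MW; summing the unrounded values gives roughly 470 to 535 MW, a difference well within the uncertainty of the guess.

```python
def power_mw(hash_rate_phs, j_per_gh):
    # 1 PH/s = 1e6 GH/s, so PH/s * J/GH is the power draw in MW
    return hash_rate_phs * j_per_gh

# Hash rate added after June 2016: 1750 PH/s at 0.11-0.13 J/GH
new_low, new_high = power_mw(1750, 0.11), power_mw(1750, 0.13)

# Hash rate existing as of June 2016: half upgraded, half still BM1385/A3218
old_low = power_mw(750, 0.11) + power_mw(750, 0.26)
old_high = power_mw(750, 0.13) + power_mw(750, 0.28)

total_low, total_high = new_low + old_low, new_high + old_high

def twh_per_year(mw):
    return mw * 8760 / 1e6   # MW * hours per year = MWh, then MWh -> TWh

print(f"added since June 2016: {new_low:.1f}-{new_high:.1f} MW")
print(f"existing in June 2016: {old_low:.1f}-{old_high:.1f} MW")
print(f"total (unrounded):     {total_low:.1f}-{total_high:.1f} MW, "
      f"{twh_per_year(total_low):.2f}-{twh_per_year(total_high):.2f} TWh/year")
```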

Economics of Mining

Given the apparently high energy efficiency of ASIC miners, and hence the relatively small percentage of mining income needed to cover their electricity costs, it may seem that mining is an extremely profitable, risk-free venture, right?

Not necessarily. Though mining can be quite profitable, in reality it depends mostly on (1) luck regarding when BTC gains in value and (2) timing, that is, how early a given model of mining machine is put online compared to competing miners deploying the same machines. I say this as founder of mining ASIC integrator TAV, as an investor who deployed over time $250k+ of GPUs, FPGAs, and ASICs, and as someone who once drove 2000+ miles to transport his GPU farm to East Wenatchee, Washington State in 2011 in order to exploit the nation’s cheapest electricity at $0.021/kWh. Yes, it was worth it!

To demonstrate the real-world profitability of a miner, I modeled the income and costs generated by an Antminer S5 batch #1 ($418, 590 W, 1155 GH/s) starting from its release date on 27 December 2014, assuming mined bitcoins are sold on a daily basis at the Coindesk BPI, and assuming $0.05/kWh. See income-antminer-s5.csv

The CSV file shows that on its first day an S5 mined 0.01472124 BTC = $4.64 and cost $0.71 in electricity, and therefore generated $3.93 of net income (15% of mining income is spent on electricity.)

The income decreased over time. 1 year and 9 months later, on 8 October 2016, electricity costs surpassed income for the first time. By that date the total income was $1021. So a miner who had invested $418 into an S5 would have turned it into $1021, a 2.4× gain. So yes, mining was quite profitable. (However, another investor who bought $418 worth of bitcoins on 27 December 2014 would have held $818 worth on 8 October 2016, a 2.0× gain. It could be argued that a large part of why mining was profitable was simply that BTC gained value.)
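The first-day figures and the two gain multiples can be reproduced from the numbers quoted above. The sketch below does not read the CSV file; it only uses the S5 specifications, the assumed $0.05/kWh, and the dollar amounts stated in the text, and all variable names are illustrative.

```python
# Antminer S5 batch #1 specifications and assumptions quoted in the text
PRICE_USD = 418
POWER_W = 590
HASH_RATE_GHS = 1155
USD_PER_KWH = 0.05

# Efficiency in J/GH (W per GH/s); comes out close to the 0.51 J/GH used for BM1384
efficiency = POWER_W / HASH_RATE_GHS

# Daily electricity cost: W -> kWh per day -> USD
daily_kwh = POWER_W * 24 / 1000
daily_cost = daily_kwh * USD_PER_KWH

# First day: $4.64 mined, per the article's CSV
first_day_mined = 4.64
first_day_net = first_day_mined - daily_cost
electricity_share = daily_cost / first_day_mined

# Gain multiples as of 8 October 2016, using the totals stated in the text
mining_gain = 1021 / PRICE_USD
holding_gain = 818 / PRICE_USD

print(f"efficiency:          {efficiency:.2f} J/GH")
print(f"daily electricity:   ${daily_cost:.2f}")
print(f"first-day net:       ${first_day_net:.2f} ({electricity_share:.0%} spent on electricity)")
print(f"mining vs. holding:  {mining_gain:.1f}x vs. {holding_gain:.1f}x")
```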

Some interesting observations:

- By 15 January 2016 84% of the lifetime income of the S5 had been generated; at this point 39% of the daily income ($0.71 out of $1.84) was being spent on electricity.

- By 15 July 2016 99% of the lifetime income of the S5 had been generated; at this point 78% of the daily income ($0.71 out of $0.90) was being spent on electricity, and in total $403 had been spent on electricity, which is still slightly less than the cost of the hardware at $418.

The S5 started with electricity costs at 15%, generated a good chunk of its income by 39%, and essentially became worthless beyond 78%.

Summary

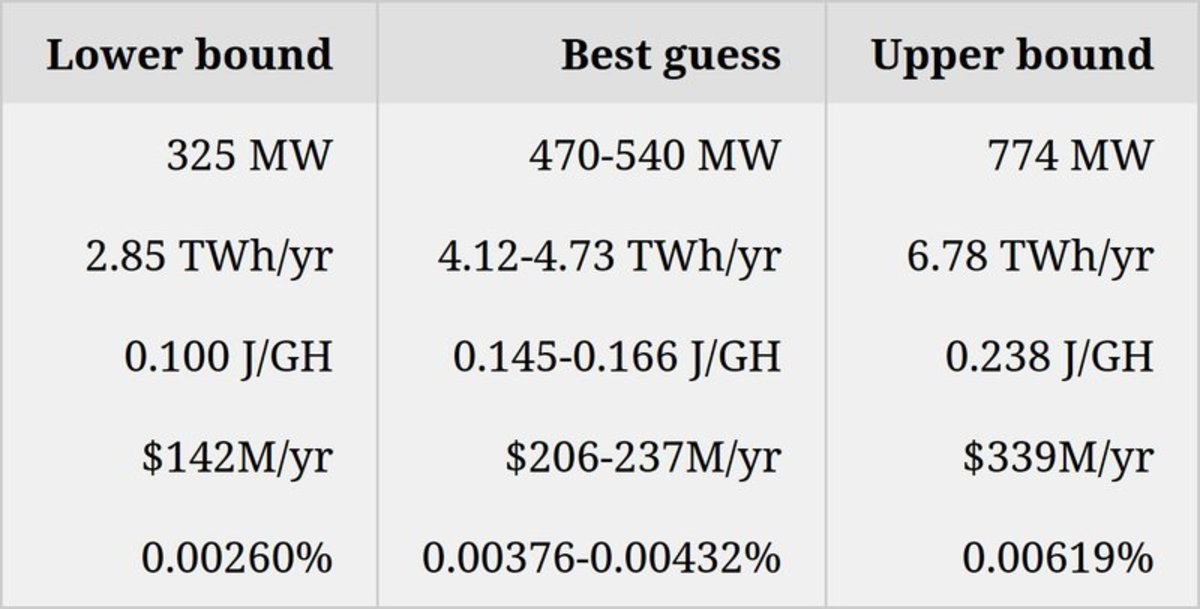

We can calculate the upper bound for the global electricity consumption of Bitcoin miners by assuming they deploy the least efficient hardware of their time and never upgrade it. The lower bound can be calculated by assuming everyone has upgraded to the most efficient hardware. The table below summarizes the electricity consumption of miners, their energy efficiency, annual electrical costs (assuming $0.05/kWh), and percentage of the world’s energy consumption (9 425 Mtoe, or 109 613 TWh, or 12.51 TW, according to IEA statistics for year 2014), with all numbers calculated as of February 26, 2017:
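As a sketch of how these summary quantities follow from the power estimates given earlier (325 MW, 470-540 MW, and 774 MW), the Python below derives the annual consumption, the annual electricity cost at $0.05/kWh, and the share of the world's energy consumption. The function `summarize` and the scenario labels are my own; the printed values are derived from the figures in the text and are not a copy of the author's table.

```python
WORLD_ENERGY_TWH = 109_613   # IEA figure for 2014 quoted in the text
USD_PER_KWH = 0.05
HOURS_PER_YEAR = 8760

def summarize(power_mw):
    """Return (TWh/year, annual cost in million USD, % of world energy)."""
    twh = power_mw * HOURS_PER_YEAR / 1e6        # MWh -> TWh
    cost_musd = twh * 1e9 * USD_PER_KWH / 1e6    # TWh -> kWh -> USD -> million USD
    pct_world = twh / WORLD_ENERGY_TWH * 100
    return twh, cost_musd, pct_world

scenarios = {
    "lower bound": 325,
    "best guess (low)": 470,
    "best guess (high)": 540,
    "upper bound": 774,
}

for name, mw in scenarios.items():
    twh, cost_musd, pct = summarize(mw)
    print(f"{name:18s} {mw:4d} MW  {twh:5.2f} TWh/yr  "
          f"${cost_musd:6.1f}M/yr  {pct:.4f}% of world energy")
```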

This may sound like a lot of electricity, but when we consider the big picture, I believe Bitcoin mining is not wasteful. An interesting comparison is that, according to a 2008 study from the United States Energy Department’s Energy Information Administration (EIA), these figures are comparable to or less than the annual electricity consumption of decorative Christmas lights in the country (6.63 TWh/year.)

Lastly, when modeling the costs and revenues of a miner such as the Antminer S5 over its entire life, we find that the hardware cost is as high as, if not higher than, its lifetime electricity cost. Therefore a miner’s business plan should not look at electricity costs alone, and cannot trivialize hardware costs when calculating expected profitability.

This is a guest post by Marc Bevand. The opinions expressed are his own and do not necessarily reflect those of Bitcoin Magazine.