The new Nvidia beta driver package that allows clock, memory, and voltage adjustments was released this week, and I thought it would be interesting to see just how far we can push the EVGA GTX 1070’s Ethereum hashing now that we have full control over the hardware. If you read our first review of the GPU, you’ll know that the card stands on its own as a power-efficient piece of equipment, but just how far a little optimisation goes may surprise you.

Testing Methodology

Miner benchmarking is worlds away from your typical hardware testing. Manufacturers, architectures and even board and cooler designs can introduce incredible variance in how the tested device performs. As our goal is to inform you about what you can expect from a given mining setup should you choose to purchase it, we make sure to give each GPU or ASIC a good environment through optimisation, even if that means a few variables may change card-to-card. We chose this approach because in the real world, miners optimise heavily for their hardware, and it simply isn’t a one-testbed-fits-all scenario. Here are the variables we observe and how we control for them.

What Changes:

OS

Drivers

Mining Software

Fan Speeds/Profiles

What Does Not:

Test Bed Hardware

Card Bios

Core/Memory Clock Speed

Benchmark Data Collection
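The write-up does not say which tooling handles benchmark data collection, so the following is only a sketch of one way to log GPU-side figures on a headless Linux box. It assumes a single GPU and polls `nvidia-smi` through its `--query-gpu` option; the function names (`sample_gpu`, `parse_sample`, `summarize`, `log_run`) are hypothetical and not part of our test harness. Note that `power.draw` is the board power reported by the driver, so it will read lower than a measurement taken at the wall.

```python
import statistics
import subprocess
import time

# Fields requested from nvidia-smi's --query-gpu option.
QUERY = "power.draw,temperature.gpu,clocks.mem"


def sample_gpu():
    """Return one CSV line from nvidia-smi (assumes a single GPU)."""
    return subprocess.check_output(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        text=True,
    ).strip()


def parse_sample(line):
    """Split one CSV line into (power_w, temp_c, mem_clock_mhz).

    Raises ValueError if the driver reports a field as unsupported.
    """
    power, temp, mem_clock = (field.strip() for field in line.split(","))
    return float(power), int(temp), int(mem_clock)


def summarize(lines):
    """Average board power and peak temperature over a run."""
    samples = [parse_sample(line) for line in lines]
    return {
        "mean_power_w": statistics.mean(s[0] for s in samples),
        "max_temp_c": max(s[1] for s in samples),
    }


def log_run(duration_s=60, interval_s=1.0):
    """Poll the GPU for duration_s seconds and summarize the samples."""
    lines = []
    for _ in range(int(duration_s / interval_s)):
        lines.append(sample_gpu())
        time.sleep(interval_s)
    return summarize(lines)
```

Calling `log_run()` while the miner is under load would return a small dictionary with the mean reported power and the peak temperature for that window. Keeping the parsing separate from the polling means the summary logic can be checked against saved log lines without a GPU present.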

With that out of the way, here is the system information on our EVGA Gaming SC ACX 3.0 GTX 1070 Benchmark:

CPU: i7-6700k @ 4.3 GHz

RAM: 16 GB DDR4 2400

PSU: 700W 80+ Titanium PSU

SSD: 120 GB Kingston SSD

GPU (Tested Component): EVGA Gaming SC ACX 3.0 GTX 1070

OS: Arch Linux, Kernel 4.7.0-1-ARCH (headless to reduce GPU overhead)

Ethereum Client/Miner: C++ Implementation of Ethereum, version 1.2.9, Protocol Version 63

Driver: NVIDIA driver version 370.23-linux-x64, CUDA 7.5

Case: Corsair 200R (We run the benchmarks in a closed case to simulate less-than-ideal thermal conditions common to mining setups.)

GTX 1070 Ethereum Mining Performance on a (Power) Budget

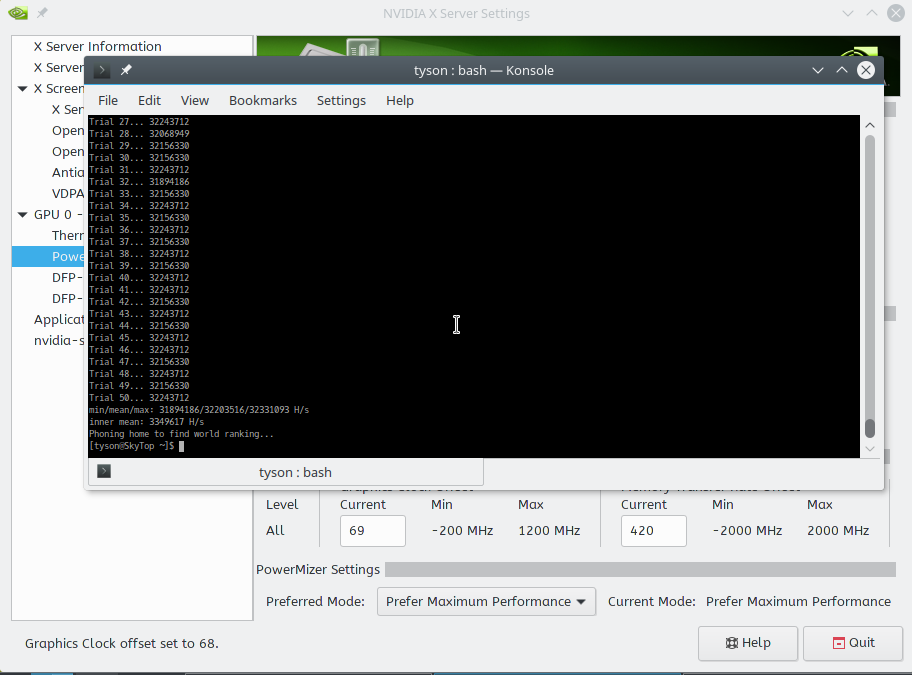

Our last run put the 1070 slightly ahead of the stock 480 with a lower power draw from the wall. After pursuing several avenues to squeeze every last bit of Ethereum hashpower out of our EVGA test GPU, we came out with an optimized configuration that hashes at 32.4 MH/s, pulls 146 watts under load, and reaches a maximum of 63 degrees in our test bed case.

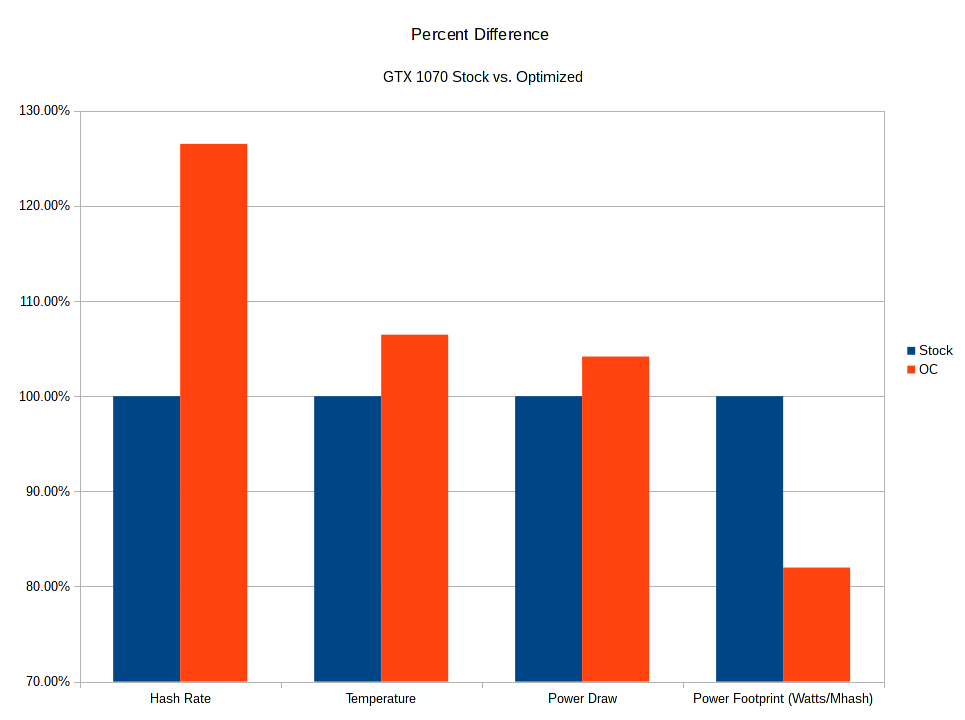

For reference, a 390X does about the same numbers while drawing more than 350 watts. Where efficiency’s concerned, we’re looking at an entirely new league with the 1070. Here’s a look at our testing runs at stock and optimized configuration:

The trick to getting it to this level of performance was stripping out the GUI. Without a window manager or graphical back-end clogging up the compute queue of this beast, we could get a little crazy with our memory overclock: we got the GDDR5 chips up to 9570 MHz, put a modest offset on the core clock, and undervolted until we hit the threshold of stability. After dialing the voltage back up to something we were slightly more comfortable with, we ran it through the gauntlet, and the results make it abundantly clear just how much optimization headroom this GPU has under the hood when it comes to Ethereum mining.

Our efforts yielded a 27 percent increase in hash power with just a 6 percent increase in thermal footprint and a 4 percent increase in power draw. Not only that, but the 1070’s power cost per unit of hashrate comes down about 18 percent, to just 4.6 watts per MH/s. This new information makes the value proposition of a 1070-based rig much more competitive in the current market. Whether it becomes the best of the bunch is largely up to what we can squeeze out of our other GPUs, but for now, the 1070 is looking like a much stronger contender.
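For readers who want to check the efficiency arithmetic, here is a minimal sketch that starts from the rounded headline numbers in this article (32.4 MH/s, 146 watts, a 27 percent hash gain, a 4 percent power gain) and backs out the implied stock figures. The stock values it prints are estimates derived from those percentages, not separate measurements, and the helper name `watts_per_mhs` is just for illustration.

```python
# Headline figures quoted in the article for the optimized GTX 1070.
OPT_HASHRATE_MHS = 32.4   # MH/s
OPT_POWER_W = 146.0       # watts under load

# Relative gains over stock quoted in the article.
HASH_GAIN = 0.27
POWER_GAIN = 0.04


def watts_per_mhs(power_w, hashrate_mhs):
    """Power cost of one MH/s of hashrate; lower is better."""
    return power_w / hashrate_mhs


# Back out the implied stock figures (estimates, not measurements).
stock_hashrate = OPT_HASHRATE_MHS / (1 + HASH_GAIN)
stock_power = OPT_POWER_W / (1 + POWER_GAIN)

opt_eff = watts_per_mhs(OPT_POWER_W, OPT_HASHRATE_MHS)
stock_eff = watts_per_mhs(stock_power, stock_hashrate)
improvement = 1 - opt_eff / stock_eff

print(f"implied stock: {stock_hashrate:.1f} MH/s at {stock_power:.0f} W")
print(f"stock efficiency: {stock_eff:.2f} W per MH/s")
print(f"optimized efficiency: {opt_eff:.2f} W per MH/s")
print(f"efficiency improvement: {improvement:.0%}")
```

Run as written, the improvement comes out at roughly 18 percent, in line with the figure above. The optimized efficiency computed from the rounded inputs lands near 4.5 watts per MH/s, a touch under the quoted 4.6, which likely reflects rounding in the published numbers.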

Questions about ETH mining or our methodology? Be sure to leave them in the comments!